What War by A.I. Actually Looks Like

In November the left-wing Israeli outlets +972 magazine and Local Call published a disturbing investigation by the journalist Yuval Abraham into the Israel Defense Forces’ use of an artificial intelligence system for identifying targets in Gaza — which one former intelligence official described as a “mass assassination factory.”

Toward the end of a year clouded by visions of an A.I. apocalypse — visions that sometimes featured autonomous weapons systems going rogue — you might have expected an enormous and alarmed response. Instead, the report that a war was being conducted partly by A.I. made only a small ripple in debates over Israel’s conduct in Gaza.

Perhaps that was partly because — to an unnerving degree — experts accept that forms of A.I. are already in widespread use among the world’s leading militaries, including in the United States, where the Pentagon has been developing A.I. for military purposes at least since the Obama administration. According to Foreign Affairs, at least 30 countries now operate defense systems that have autonomous modes. Many of us still regard artificial intelligence wars as visions from a science-fiction future, but A.I. is already stitched into global military operations as surely as it’s stitched into the fabric of our everyday lives.

It’s not just out-of-control A.I. that poses a threat. Under-control systems can do harm, too. The Washington Post called the war in Ukraine a “super lab of invention,” marking a “revolution in drone warfare using A.I.” The Pentagon is developing a response to A.I.-powered drone swarms, a threat that has come to seem less distant as the U.S. military counters drone attacks by Yemen’s Houthis in the Red Sea. According to The Associated Press, some analysts have suggested that it is only a matter of time before “drones will be used to identify, select and attack targets without help from humans.” Such swarms, directed by systems operating too fast for human oversight, “are about to change the balance of military power,” Elliot Ackerman and Admiral James Stavridis, a former supreme allied commander of NATO, predicted in The Wall Street Journal last month. Others have suggested that this future is already here.

Like the invasion of Ukraine, the ferocious offensive in Gaza has looked at times like a throwback, in some ways more closely resembling a 20th-century total war than the counterinsurgencies and smart campaigns to which Americans have grown more accustomed. By December, nearly 70 percent of Gaza’s homes and more than half its buildings had been damaged or destroyed. Today fewer than one-third of its hospitals remain functioning, and 1.1 million Gazans are facing “catastrophic” food insecurity, according to the United Nations. It may look like an old-fashioned conflict, but the Israel Defense Forces’ offensive is also an ominous hint of the military future — both enacted and surveilled by technologies arising only since the war on terrorism began.

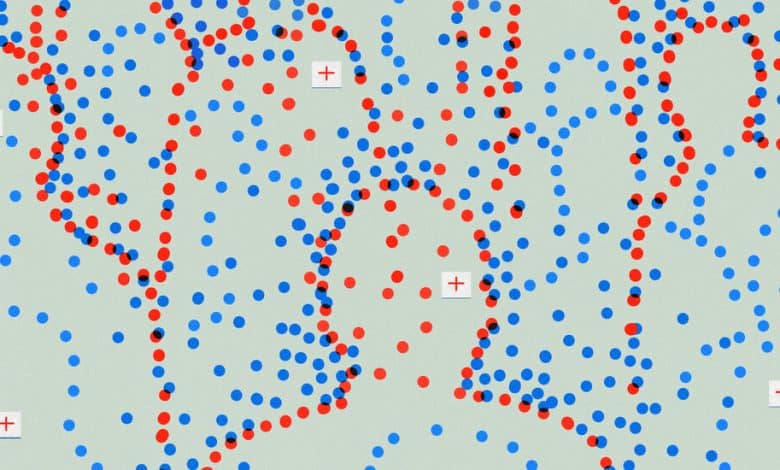

Last week +972 and Local Call published a follow-up investigation by Abraham, which is very much worth reading in full. (The Guardian also published a piece drawing on the same reporting, under the headline “The Machine Did It Coldly.” The reporting has been brought to the attention of John Kirby, the U.S. national security spokesman, and has been discussed by Aida Touma-Sliman, an Israeli Arab member of the Knesset, and by the United Nations secretary general, António Guterres, who said he was “deeply troubled” by it.) The November report describes a system called Habsora (the Gospel), which, according to the current and former Israeli intelligence officers interviewed by Abraham, identifies “buildings and structures that the army claims militants operate from.” The new investigation, which has been contested by the Israel Defense Forces, documents another system, known as Lavender, used to compile a “kill list” of suspected combatants. The Lavender system, Abraham writes, “has played a central role in the unprecedented bombing of Palestinians, especially during the early stages of the war.”