What to Know About Tech Companies Using A.I. to Teach Their Own A.I.

OpenAI, Google and other tech companies train their chatbots with huge amounts of data culled from books, Wikipedia articles, news stories and other sources across the internet. But in the future, they hope to use something called synthetic data.

That’s because tech companies may exhaust the high-quality text the internet has to offer for the development of artificial intelligence. And the companies are facing copyright lawsuits from authors, news organizations and computer programmers for using their works without permission. (In one such lawsuit, The New York Times sued OpenAI and Microsoft.)

Synthetic data, they believe, will help reduce copyright issues and boost the supply of training materials needed for A.I. Here’s what to know about it.

What is synthetic data?

It’s data generated by artificial intelligence.

Does that mean tech companies want A.I. to be trained by A.I.?

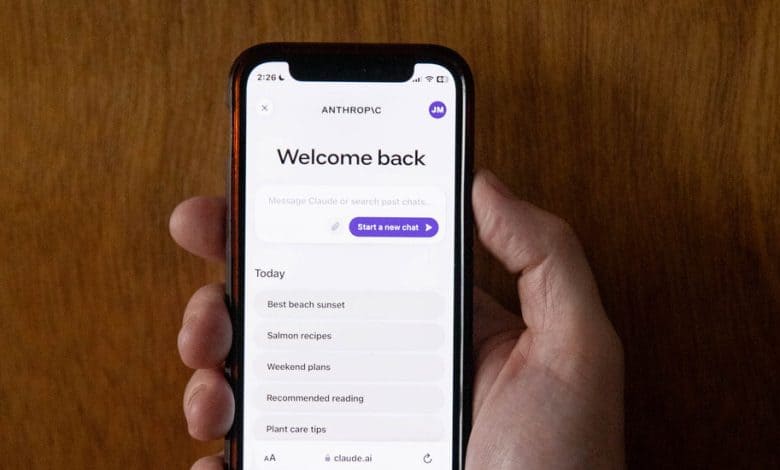

Yes. Rather than training A.I. models with text written by people, tech companies like Google, OpenAI and Anthropic hope to train their technology with data generated by other A.I. models.

Does synthetic data work?

Not exactly. A.I. models get things wrong and make stuff up. They have also shown that they pick up on the biases that appear in the internet data on which they have been trained. So if companies use A.I. to train A.I., they can end up amplifying their own flaws.

Is synthetic data widely used by tech companies right now?

No. Tech companies are experimenting with it. But because of the potential flaws of synthetic data, it is not a big part of the way A.I. systems are built today.